Fitting distributions using primarycensorseddist and cmdstan

Sam Abbott

Source:vignettes/fitting-dists-with-stan.Rmd

fitting-dists-with-stan.Rmd1 Introduction

1.1 What are we going to do in this Vignette

In this vignette, we’ll demonstrate how to use primarycensoreddist in conjunction with Stan for Bayesian inference of epidemiological delay distributions. We’ll cover the following key points:

- Simulating censored delay distribution data

- Fitting a naive model using cmdstan

- Evaluating the naive model’s performance

- Fitting an improved model using

primarycensoreddistfunctionality - Comparing the

primarycensoreddistmodel’s performance to the naive model

1.2 What might I need to know before starting

This vignette builds on the concepts introduced in the Getting Started with primarycensoreddist vignette and assumes familiarity with using Stan tools as covered in the How to use primarycensoreddist with Stan vignette.

2 Simulating censored and truncated delay distribution data

We’ll start by simulating some censored and truncated delay distribution data. We’ll use the rprimarycensoreddist function (actually we will use the rpcens alias for brevity).

set.seed(123) # For reproducibility

# Define the true distribution parameters

n <- 2000

meanlog <- 1.5

sdlog <- 0.75

# Generate varying pwindow, swindow, and obs_time lengths

pwindows <- sample(1:2, n, replace = TRUE)

swindows <- sample(1:2, n, replace = TRUE)

obs_times <- sample(8:10, n, replace = TRUE)

# Function to generate a single sample

generate_sample <- function(pwindow, swindow, obs_time) {

rpcens(

1, rlnorm,

meanlog = meanlog, sdlog = sdlog,

pwindow = pwindow, swindow = swindow, D = obs_time

)

}

# Generate samples

samples <- mapply(generate_sample, pwindows, swindows, obs_times)

# Create initial data frame

delay_data <- data.frame(

pwindow = pwindows,

obs_time = obs_times,

observed_delay = samples,

observed_delay_upper = samples + swindows

) |>

mutate(

observed_delay_upper = pmin(obs_time, observed_delay_upper)

)

head(delay_data)## pwindow obs_time observed_delay observed_delay_upper

## 1 1 9 3 4

## 2 1 9 4 5

## 3 1 8 6 7

## 4 2 10 6 8

## 5 1 10 8 10

## 6 2 8 4 5

# Aggregate to unique combinations and count occurrences

# Aggregate to unique combinations and count occurrences

delay_counts <- delay_data |>

summarise(

n = n(),

.by = c(pwindow, obs_time, observed_delay, observed_delay_upper)

)

head(delay_counts)## pwindow obs_time observed_delay observed_delay_upper n

## 1 1 9 3 4 37

## 2 1 9 4 5 31

## 3 1 8 6 7 16

## 4 2 10 6 8 26

## 5 1 10 8 10 22

## 6 2 8 4 5 43

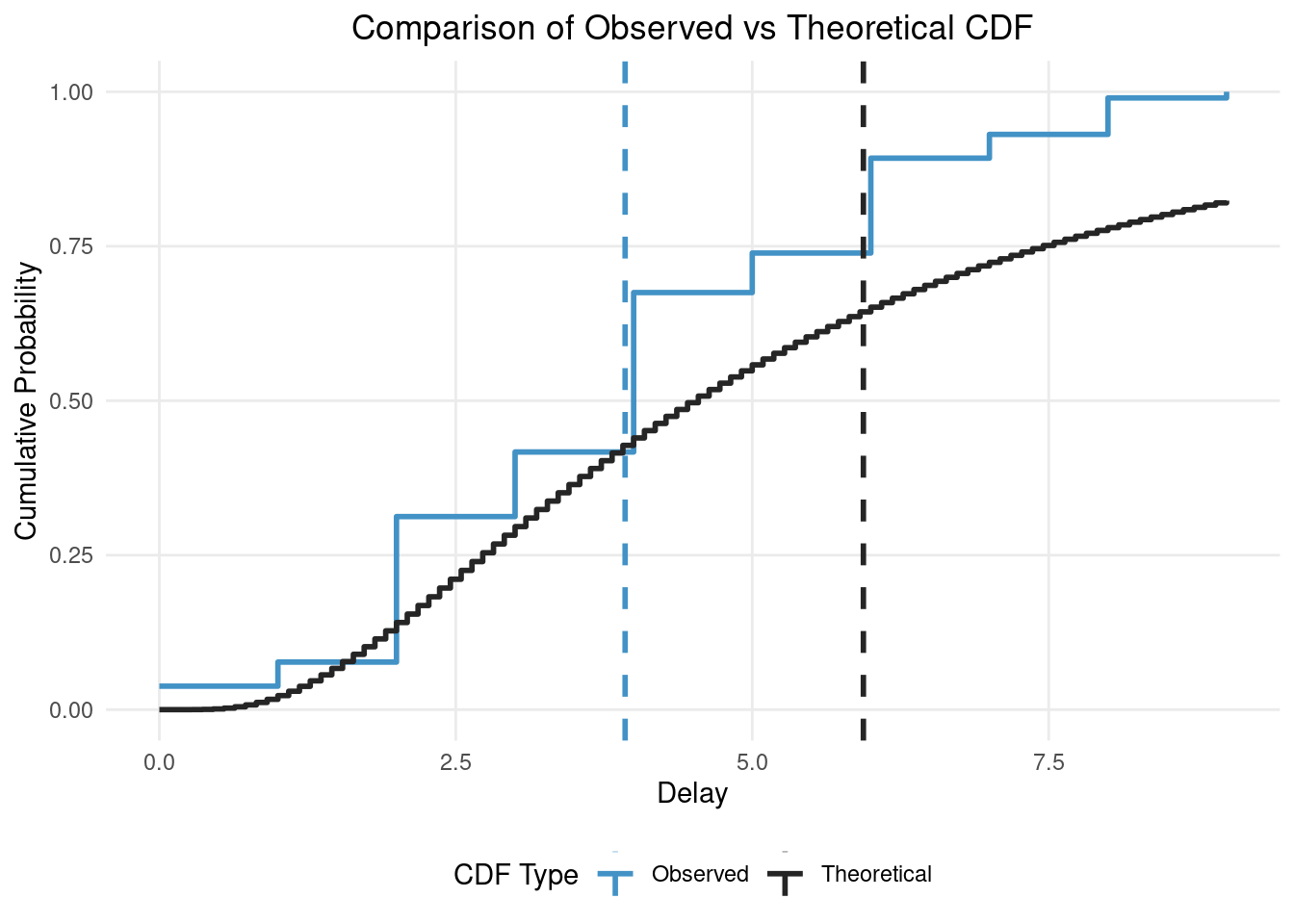

# Compare the samples with and without secondary censoring to the true

# distribution

# Calculate empirical CDF

empirical_cdf <- ecdf(samples)

# Create a sequence of x values for the theoretical CDF

x_seq <- seq(min(samples), max(samples), length.out = 100)

# Calculate theoretical CDF

theoretical_cdf <- plnorm(x_seq, meanlog = meanlog, sdlog = sdlog)

# Create a long format data frame for plotting

cdf_data <- data.frame(

x = rep(x_seq, 2),

probability = c(empirical_cdf(x_seq), theoretical_cdf),

type = rep(c("Observed", "Theoretical"), each = length(x_seq)),

stringsAsFactors = FALSE

)

# Plot

ggplot(cdf_data, aes(x = x, y = probability, color = type)) +

geom_step(linewidth = 1) +

scale_color_manual(

values = c(Observed = "#4292C6", Theoretical = "#252525")

) +

geom_vline(

aes(xintercept = mean(samples), color = "Observed"),

linetype = "dashed", linewidth = 1

) +

geom_vline(

aes(xintercept = exp(meanlog + sdlog^2 / 2), color = "Theoretical"),

linetype = "dashed", linewidth = 1

) +

labs(

title = "Comparison of Observed vs Theoretical CDF",

x = "Delay",

y = "Cumulative Probability",

color = "CDF Type"

) +

theme_minimal() +

theme(

panel.grid.minor = element_blank(),

plot.title = element_text(hjust = 0.5),

legend.position = "bottom"

)

We’ve aggregated the data to unique combinations of pwindow, swindow, and obs_time and counted the number of occurrences of each observed_delay for each combination. This is the data we will use to fit our model.

3 Fitting a naive model using cmdstan

We’ll start by fitting a naive model using cmdstan. We’ll use the cmdstanr package to interface with cmdstan. We define the model in a string and then write it to a file as in the How to use primarycensoreddist with Stan vignette.

writeLines(

"data {

int<lower=0> N; // number of observations

vector[N] y; // observed delays

vector[N] n; // number of occurrences for each delay

}

parameters {

real mu;

real<lower=0> sigma;

}

model {

// Priors

mu ~ normal(1, 1);

sigma ~ normal(0.5, 1);

// Likelihood

target += n .* lognormal_lpdf(y | mu, sigma);

}",

con = file.path(tempdir(), "naive_lognormal.stan")

)Now let’s compile the model

naive_model <- cmdstan_model(file.path(tempdir(), "naive_lognormal.stan"))and now let’s fit the compiled model.

naive_fit <- naive_model$sample(

data = list(

# Add a small constant to avoid log(0)

y = delay_counts$observed_delay + 1e-6,

n = delay_counts$n,

N = nrow(delay_counts)

),

chains = 4,

parallel_chains = 4,

refresh = ifelse(interactive(), 50, 0),

show_messages = interactive()

)

naive_fit## Warning: NAs introduced by coercion## variable mean median sd mad q5 q95 rhat ess_bulk

## lp__ -381721.39 -381721.00 1.04 0.00 -381723.00 -381720.00 1.00 1641

## mu -0.60 -0.60 0.01 0.01 -0.62 -0.58 1.00 1331

## sigma 5.10 5.10 0.01 0.01 5.09 5.12 1.00 4340

## ess_tail

## NA

## 1521

## 2759We see that the model has converged and the diagnostics look good. However, just from the model posterior summary we see that we might not be very happy with the fit. mu is smaller than the target 1.5 and sigma is larger than the target 0.75.

4 Fitting an improved model using primarycensoreddist

We’ll now fit an improved model using the primarycensoreddist package. The main improvement is that we will use the primary_censored_dist_lpdf function to fit the model. This is the Stan version of the pcens() function and accounts for the primary and secondary censoring windows as well as the truncation. We encode that our primary distribution is a lognormal distribution by passing 1 as the dist_id parameter and that our primary event distribution is uniform by passing 1 as the primary_dist_id parameter. See the Stan documentation for more details on the primary_censored_dist_lpdf function.

writeLines(

"

functions {

#include primary_censored_dist.stan

#include primary_censored_dist_analytical_cdf.stan

#include expgrowth.stan

}

data {

int<lower=0> N; // number of observations

array[N] int<lower=0> y; // observed delays

array[N] int<lower=0> y_upper; // observed delays upper bound

array[N] int<lower=0> n; // number of occurrences for each delay

array[N] int<lower=0> pwindow; // primary censoring window

array[N] int<lower=0> D; // maximum delay

}

transformed data {

array[0] real primary_params;

}

parameters {

real mu;

real<lower=0> sigma;

}

model {

// Priors

mu ~ normal(1, 1);

sigma ~ normal(0.5, 0.5);

// Likelihood

for (i in 1:N) {

target += n[i] * primary_censored_dist_lpmf(

y[i] | 1, {mu, sigma},

pwindow[i], y_upper[i], D[i],

1, primary_params

);

}

}",

con = file.path(tempdir(), "primarycensoreddist_lognormal.stan")

)Now let’s compile the model

primarycensoreddist_model <- cmdstan_model(

file.path(tempdir(), "primarycensoreddist_lognormal.stan"),

include_paths = pcd_stan_path()

)Now let’s fit the compiled model.

primarycensoreddist_fit <- primarycensoreddist_model$sample(

data = list(

y = delay_counts$observed_delay,

y_upper = delay_counts$observed_delay_upper,

n = delay_counts$n,

pwindow = delay_counts$pwindow,

D = delay_counts$obs_time,

N = nrow(delay_counts)

),

chains = 4,

parallel_chains = 4,

refresh = ifelse(interactive(), 50, 0),

show_messages = interactive()

)

primarycensoreddist_fit## variable mean median sd mad q5 q95 rhat ess_bulk ess_tail

## lp__ -3422.78 -3422.46 1.06 0.73 -3425.06 -3421.80 1.00 1357 1504

## mu 1.55 1.54 0.05 0.04 1.47 1.62 1.00 1236 1308

## sigma 0.78 0.78 0.03 0.03 0.73 0.83 1.00 1280 1149We see that the model has converged and the diagnostics look good. We also see that the posterior means are very near the true parameters and the 90% credible intervals include the true parameters.

5 Using pcd_cmdstan_model() for a more efficient approach

While the previous approach works well, primarycensoreddist provides a more efficient and convenient model which we can compile using pcd_cmdstan_model(). This approach not only saves time in model specification but also leverages within chain parallelisation to make best use of your machine’s resources. Alongside this we also supply a convenience function pcd_as_cmdstan_data() to convert our data into a format that can be used to fit the model and supply priors, bounds, and other settings.

Let’s use this function to fit our data:

# Compile the model with multithreading support

pcd_model <- pcd_cmdstan_model(cpp_options = list(stan_threads = TRUE))

pcd_data <- pcd_as_cmdstan_data(

delay_counts,

delay = "observed_delay",

delay_upper = "observed_delay_upper",

relative_obs_time = "obs_time",

dist_id = 1, # Lognormal distribution

primary_dist_id = 1, # Uniform primary distribution

param_bounds = list(lower = c(-Inf, 0), upper = c(Inf, Inf)),

primary_param_bounds = list(lower = numeric(0), upper = numeric(0)),

priors = list(location = c(1, 0.5), scale = c(1, 0.5)),

primary_priors = list(location = numeric(0), scale = numeric(0)),

use_reduce_sum = TRUE # use within chain parallelisation

)

pcd_fit <- pcd_model$sample(

data = pcd_data,

chains = 4,

parallel_chains = 2, # Run 2 chains in parallel

threads_per_chain = 2, # Use 2 cores per chain

refresh = ifelse(interactive(), 50, 0),

show_messages = interactive()

)

pcd_fit## variable mean median sd mad q5 q95 rhat ess_bulk ess_tail

## lp__ -3422.77 -3422.45 1.02 0.74 -3424.82 -3421.80 1.00 1294 1981

## params[1] 1.55 1.54 0.05 0.05 1.48 1.63 1.00 1140 1169

## params[2] 0.78 0.78 0.03 0.03 0.73 0.83 1.00 1095 1150In this model we have a generic params vector that contains the parameters for the delay distribution. In this case these are mu and sigma from the last example. We also have a primary_params vector that contains the parameters for the primary distribution. In this case this is empty as we are using a uniform distribution.

We see again that the model has converged and the diagnostics look good. We also see that the posterior means are very near the true parameters and the 90% credible intervals include the true parameters as with the manually written model. Note that we have set parallel_chains = 2 and threads_per_chain = 2 to demonstrate within chain parallelisation. Usually however you would want to use parallel_chains = <number of chains> and then use the remainder of the available cores for within chain parallelisation by setting threads_per_chain = <remaining cores / number of chains> to ensure that you make best use of your machine’s resources.